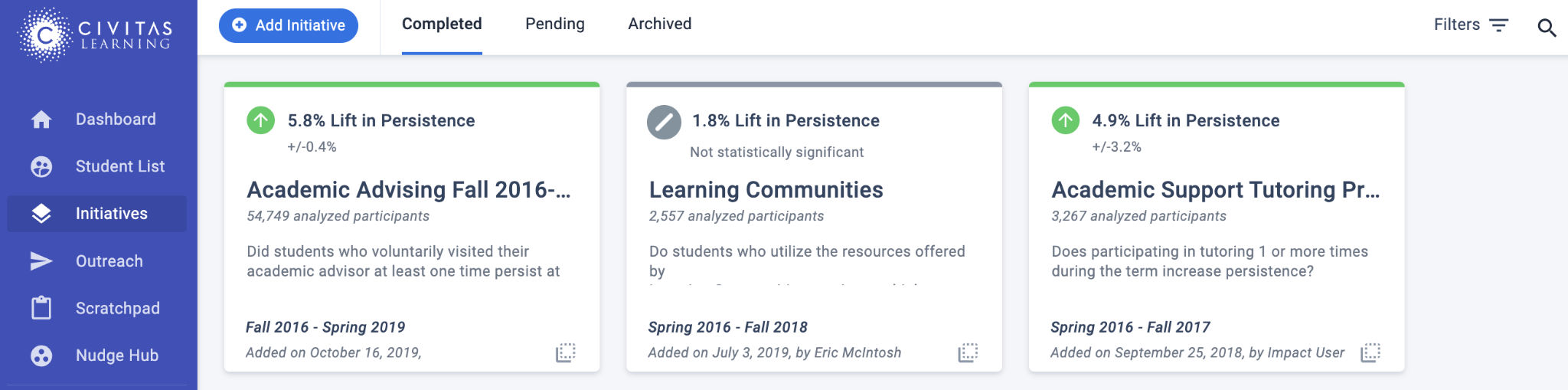

Adding a New Initiative

Start an Initiative

Click the blue ‘Add initiative’ button at the top left.

You will see the first page of a guided intro. Click the Next button to continue through the guided intro. Otherwise, click Skip Intro.

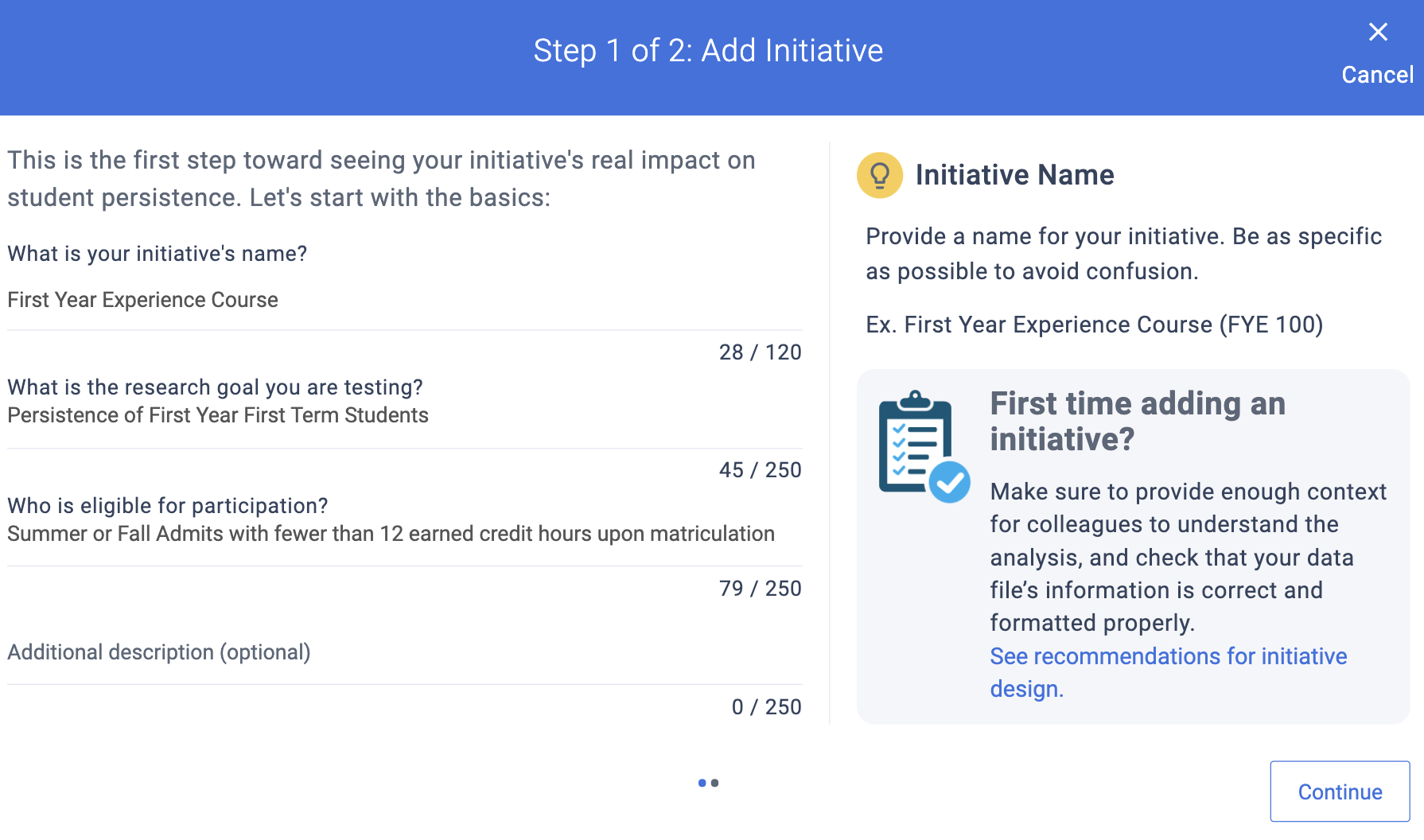

Complete the required fields describing your initiative:

- Name — Give a specific name for your initiative to differentiate it from other initiatives.

- Research Goal — Describe the hypothesis you are testing with this initiative. Consider what outcome the initiative aimed to achieve and which group of students was the focus.

- Eligibility Criteria — List criteria students must meet to participate in the initiative.

- Additional Description — Include any context about this initiative. Use this to guide your colleagues who have access to Initiative Analysis, as they will be able to see initiatives submitted by you and other users.

After completing these details about the initiative, click the Save and Continue button.

Tip

Download the Data Readiness Checklist (PDF) to save time adding the initiative.

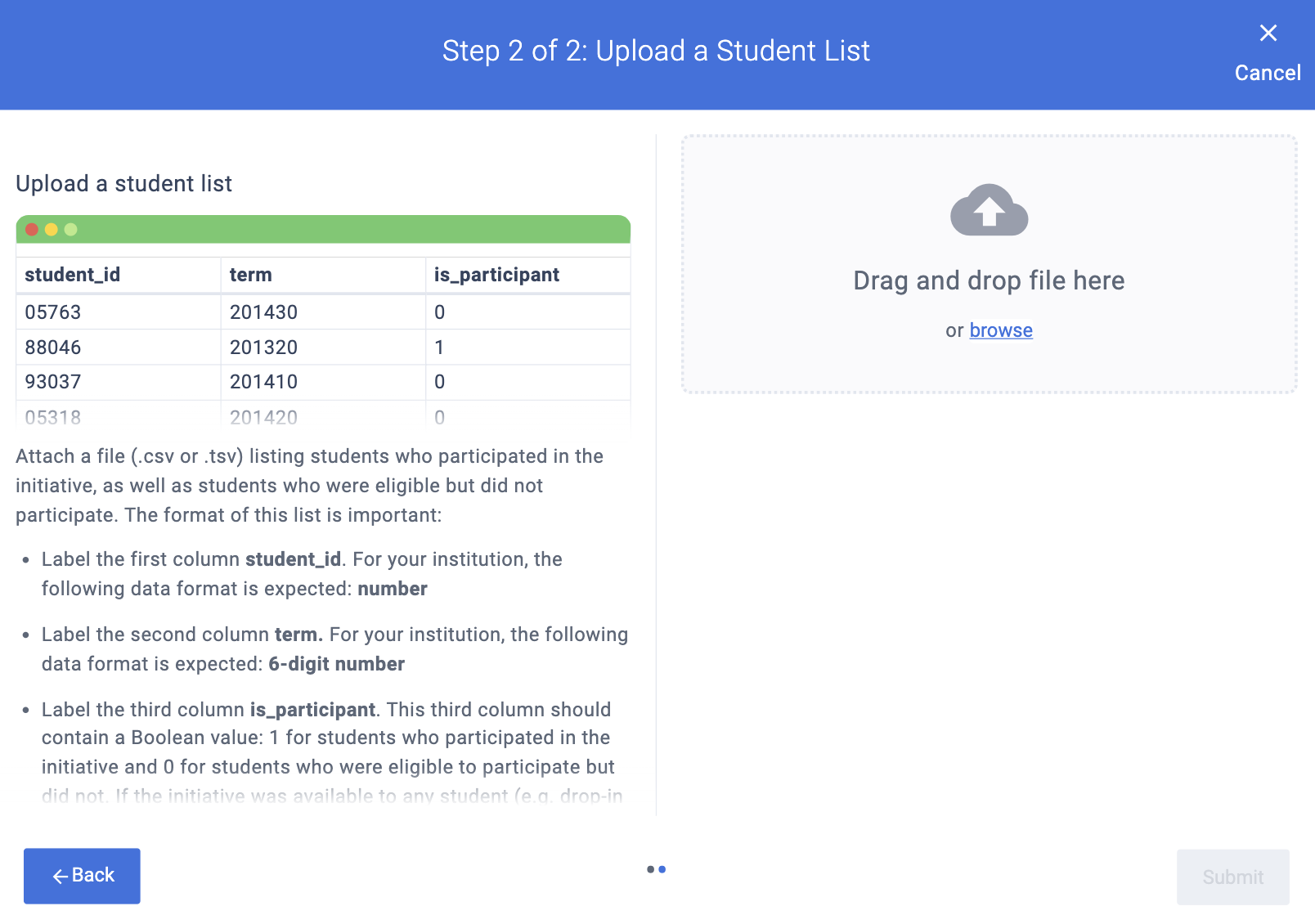

Upload a student list

After submitting details about the initiative, you’ll upload a .csv or .tsv file with details about students who were eligible to participate in the initiative. The list should include students who did participate in the initiative as well as students who were eligible but did not participate. This list of students will be used to identify similar students through PPSM for analysis. A larger list increases the number of matched student pairs after PPSM and the chance of statistically significant impact results.

How much is enough? — Technically, you can upload a list of as few as 100 students, but the lift (change in persistence rates) must be extreme (~90%) to get a significant result. For best results, have at least 1000 participant students, and be sure to include at least as many eligible comparison students as participants.

The format of this list is important:

- student_id — Use this label for the first column. Fill in the student ID numbers for students who were eligible to participate over the terms the initiative was offered.

- term — Use this label for the second column. Fill in the term during which the student participated or was eligible to participate in the initiative. If a student participated in the initiative during multiple terms, create a separate entry for each distinct term. Likewise with students who were eligible over multiple terms but did not participate, create a separate entry for each term.

- is_participant — Use this label for the third column. This is a Boolean value entered as 1 or 0. Fill in a 1 for students who participated in the initiative and a 0 for students who were eligible but did not participate. If the initiative was open to all students, then only participating students need to be included in the file. If no eligible non-participants (that is, is_participant = 0) are identified in the file, then all other students in the terms identified in the file will be considered eligible for matching and analysis.

Student ID and term values for your institution should match your institution’s system of record, and they are also specified in Initiative Analysis. You’ll see an example file image that shows the expected data format and the label reminders will have a note indicating the expected naming conventions at your institution.
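As a minimal sketch of the format described above, the following Python snippet writes a student list with the three required column labels. The student IDs, term codes, and the helper name `write_student_list` are hypothetical and only for illustration; use the ID and term formats that Initiative Analysis shows for your institution.

```python
import csv
import io

HEADER = ["student_id", "term", "is_participant"]

def write_student_list(rows, out):
    """Write (student_id, term, is_participant) rows as CSV text to `out`."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(HEADER)
    for student_id, term, is_participant in rows:
        # Keep IDs and terms as strings so leading zeros are preserved.
        writer.writerow([str(student_id), str(term), 1 if is_participant else 0])

# Hypothetical data: 5-digit student IDs and 6-digit term codes.
rows = [
    ("00123", "202110", True),   # participated in the first term
    ("00123", "202130", True),   # same student, second term: separate row
    ("04567", "202110", False),  # eligible but did not participate
]

buffer = io.StringIO()
write_student_list(rows, buffer)
print(buffer.getvalue())
```

To produce a file instead of printing, pass a file opened with `open("student_list.csv", "w", newline="")` as `out`.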

Drag and drop the completed file onto the Upload a Student List page or click the Browse link to find the file.

Remember, Initiative Analysis will show you the required format for the student ID and the term identifier. In the example below, the student ID for this institution is a 5-digit number and the term must be a 6-digit number.

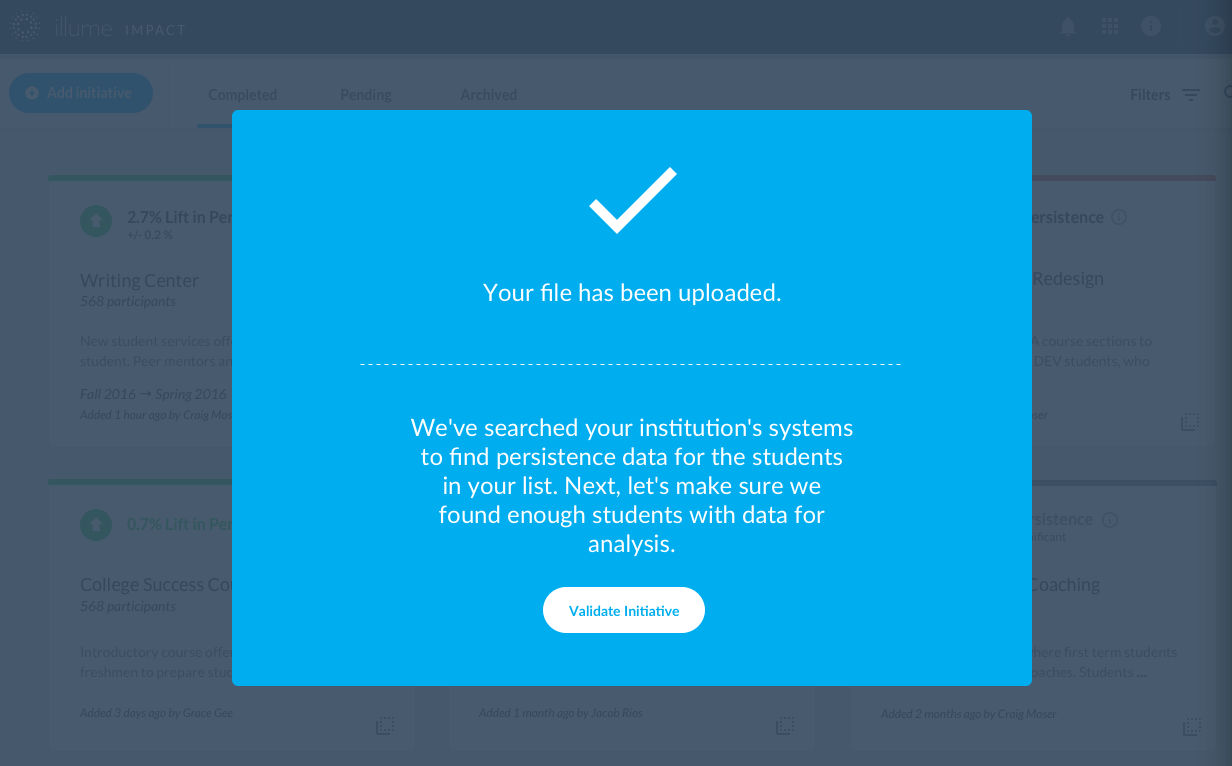

When your file has been successfully uploaded, the file name will be listed with a checkmark to the left of it. Click the Submit button after confirming that your student list was uploaded.

If your file format was correct and persistence data could be found for the students included in the list, you can begin validating the data you submitted before the initiative is analyzed. Click the Validate Initiative button to begin this step.

If your file was formatted incorrectly, you will not be able to move forward with data validation. Click the Back button to upload a new or modified file.

You will see an error message if one of the following issues with your file is identified:

- Column header error: If there are too few or too many columns, or if a column was mislabeled (such as ‘student ID’ instead of ‘student_id’).

- Wrong data type: If an entry in one of the cells in the student list is formatted incorrectly (such as a text string in the ‘is_participant’ column, which should only contain 0 or 1).
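The two error types above can be checked locally before uploading. The sketch below is not the validation that Initiative Analysis runs; it is a hypothetical helper (`find_format_problems`) that assumes the header must match the three labels exactly, including case.

```python
import csv
import io

EXPECTED_HEADER = ["student_id", "term", "is_participant"]

def find_format_problems(text, delimiter=","):
    """Return a list of human-readable problems found in student list text."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    header = next(reader, None)
    if header != EXPECTED_HEADER:
        # Covers too few or too many columns and mislabeled columns.
        return [f"column header error: found {header}"]
    problems = []
    for line_number, row in enumerate(reader, start=2):
        if len(row) != len(EXPECTED_HEADER):
            problems.append(f"line {line_number}: expected 3 values, found {len(row)}")
        elif row[2] not in ("0", "1"):
            problems.append(f"line {line_number}: is_participant must be 0 or 1")
    return problems

sample = "student_id,term,is_participant\n00123,202110,1\n04567,202110,yes\n"
print(find_format_problems(sample))
```

For a .tsv file, pass `delimiter="\t"`.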

Validate the data (Validation Wizard)

After uploading the student list file containing information about students who participated in the initiative and students who were eligible to participate but did not, there are a few data validation steps necessary prior to impact analysis.

Complete these data validation steps to verify:

- The submitted student and term data exists in the historical data shared by your institution

- There are enough eligible comparison students for matching

- There is enough data for realistic detectable effects

For a step-by-step explanation of the data validation wizard, see the Data Validation Guide.

Check overall counts of identified students

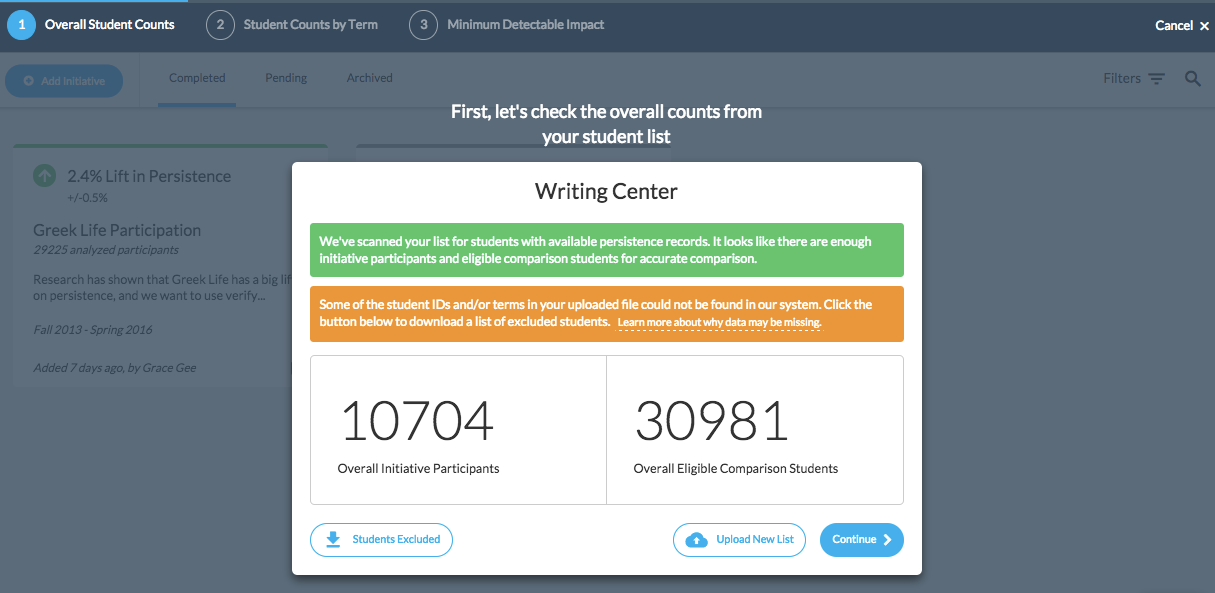

After successfully uploading your student list and clicking Validate Initiative, start by checking the overall counts from your student list:

- Initiative Participants shows the number of students submitted as participants in your student list who were also identified in the Civitas platform. This means that the student records can be found in the data set collected from your source systems (SIS, LMS, etc.) and we have data for these students that can be used for impact analysis.

- Eligible Comparison Group shows the number of students submitted as eligible for the initiative who did not participate who were also identified in the Civitas platform.

- If comparison students were included in the student list file, this count will include students from the list who were identified in your institution’s data set.

- If the initiative was open to all students and comparison students were not included in the student list file, this count will include the total number of students in your institution’s data set over the terms the initiative was offered who did not participate in the initiative. For example, if you uploaded participant data for an initiative offered during Spring and Fall 2021, the eligible comparison group will include all students enrolled during Spring AND Fall 2021 who did not participate.

If any student IDs and/or terms submitted in your list could not be found in the Civitas data set, you will see a warning that these students and/or terms will be excluded from the analysis. Click the Students Excluded button beneath the counts to download a .csv file with the details you uploaded for the students and/or terms that could not be identified in the Civitas data set.

If there are no error messages, you can decide whether to proceed with impact analysis using the identified students and terms or to upload a new list with additional student IDs and/or terms. If student counts are much lower than expected, review Troubleshooting unexpected counts below for guidance on potential errors and fixes. If you have confirmed that none of these issues apply, contact Support.

Click ‘Continue’ to see how the identified students break down over the terms the initiative was offered.

Troubleshooting unexpected student counts

If either of the overall student counts is significantly different from the number of students included in the file, start by verifying these details:

- Does the student list file have a formatting error?

- If your student_id values contain leading 0s, spreadsheet applications like Excel may remove these zeros unless the column’s data format is explicitly set to ‘text.’

- Check whether your file contains an empty column (such as “1234567,,” or “,,”), which may be added at the end of a file when manipulating data in a spreadsheet application like Excel.

- If one of your columns is named incorrectly (such as “studentID” instead of “student_id”), the file will not be recognized.

- Are the student_id and term values in the correct format?

- Double-check the format expected for your institution. If the format of your list doesn’t meet the requirements, click the ‘Upload new list’ button beneath the counts. You will return to the file upload step. Upload a corrected file at this point to save all the initial information you submitted, including the initiative name and research goal.

- Check if the student_id values in your student list should have leading zeros. Some spreadsheet applications automatically remove leading zeros when a file is saved, which could make the student_id values incorrect.

- Are the term values historic terms within the last 4 years where persistence outcomes are already known (for example, the initiative was offered in the most recent past term and the census date for the current term has passed)?

- Were the students included in the file enrolled on the census date (or day 14 of the term if your institution’s census date has not been provided) of the specified term?

- Does your institution have a non-traditional continuity model? If your institution uses a different definition of persistence, such as persisting from any current term to the next Fall term, it will affect the number of students identified. (Search for “Defining Continuity”.)
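Two of the formatting problems in this checklist, empty columns and stripped leading zeros, can be spotted with a short script. This is a rough sketch: the helper name `find_spreadsheet_damage` and the `id_length` parameter are hypothetical, and it assumes your institution uses fixed-length student IDs.

```python
import csv
import io

def find_spreadsheet_damage(text, id_length):
    """Flag lines with empty cells or student IDs shorter than id_length."""
    findings = []
    reader = csv.reader(io.StringIO(text))
    for line_number, row in enumerate(reader, start=1):
        if any(cell.strip() == "" for cell in row):
            # Typical of stray ",," left behind by a spreadsheet application.
            findings.append((line_number, "empty cell or column"))
        elif line_number > 1 and row and len(row[0]) < id_length:
            # Line 1 is the header, so only data rows are length-checked.
            findings.append((line_number, "student_id may have lost leading zeros"))
    return findings

sample = "student_id,term,is_participant\n123,202110,1\n04567,202110,0,,\n"
for finding in find_spreadsheet_damage(sample, id_length=5):
    print(finding)
```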

Interpreting error messages

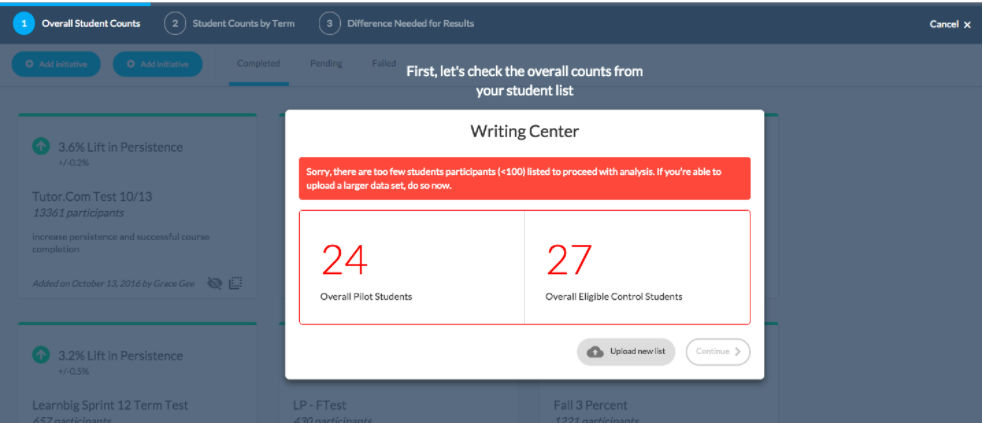

Initiative Analysis requires at least 100 initiative participants for impact analysis.

If you submit a student list with fewer than 100 participants or if fewer than 100 students were identified in the Civitas data set, you’ll see an error message and you will be required to submit a new student list.

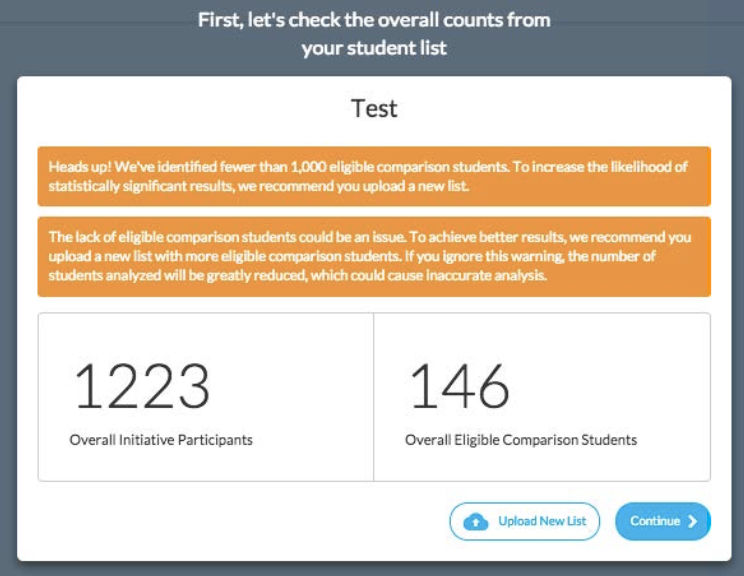

In order to have a higher likelihood of statistically significant results, it is recommended that you submit at least 1,000 initiative participants and 1,000 students for comparison. Submit a revised student list with additional students by clicking the ‘Upload new list’ button.

You will also see a warning if the number of eligible comparison students identified in the Civitas data set is less than 80% of the number of identified participants. Continuing would result in at least 20% of the participants being left out of the analysis, which could skew results.
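The thresholds in this section can be summarized in a few lines of Python. This is only an illustration of the stated rules (100 minimum participants, 1,000 recommended, 80% comparison ratio); the function name and message wording are hypothetical and do not reproduce the messages shown in the product.

```python
MIN_PARTICIPANTS = 100         # required minimum stated above
RECOMMENDED_STUDENTS = 1000    # recommended participants and comparison students
MIN_COMPARISON_PERCENT = 80    # comparison students relative to participants

def check_counts(participants, comparison):
    """Return (errors, warnings) for the identified student counts."""
    errors, warnings = [], []
    if participants < MIN_PARTICIPANTS:
        errors.append("fewer than 100 participants")
    if participants < RECOMMENDED_STUDENTS or comparison < RECOMMENDED_STUDENTS:
        warnings.append("fewer than 1,000 participants or comparison students")
    # Integer arithmetic avoids floating-point rounding at the 80% boundary.
    if comparison * 100 < MIN_COMPARISON_PERCENT * participants:
        warnings.append("comparison group is less than 80% of participants")
    return errors, warnings

print(check_counts(participants=1500, comparison=1100))
```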

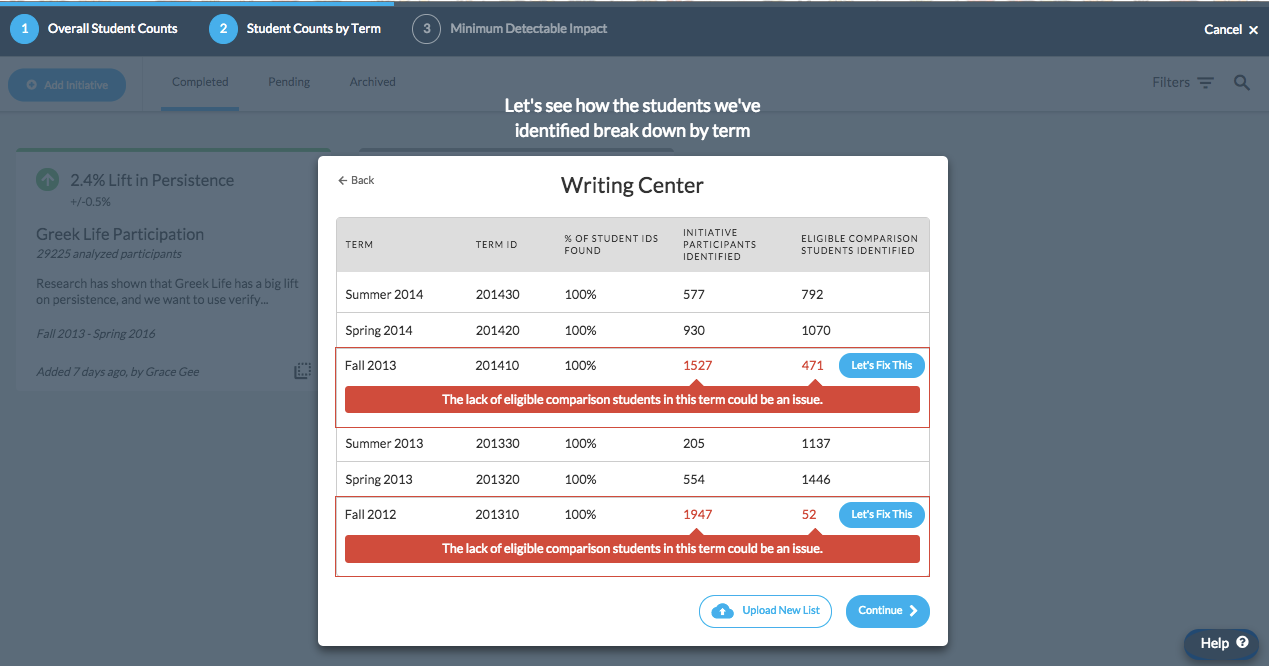

Review counts of identified students by term

After checking the overall student counts, see the breakdown of these students over the terms of data submitted. This table will allow you to see which terms may have limited data and supplement these terms with additional data before proceeding with impact analysis.

For each included term, you can review:

- Term and Term ID: The name of the term the initiative was offered (such as Winter 2021) and the term ID used in your institution’s internal systems.

- % of Student IDs Found: Out of the students submitted in your uploaded list, the percentage of student records identified in the Civitas data set collected from your systems (SIS, LMS, etc.).

- Initiative Participants: The number of participants identified from the student list who participated during the indicated term.

- Eligible Comparison Students: The number of students identified from the student list who were eligible during the indicated term but did not participate.

If the number of identified eligible comparison students for a specific term is less than 80% of the number of identified participants, continuing would result in at least 20% of the participants being left out of analysis, which could skew results. If this is the case for one of your initiatives, you’ll see a warning in red beneath the term:

The lack of eligible comparison students in this term could be an issue.

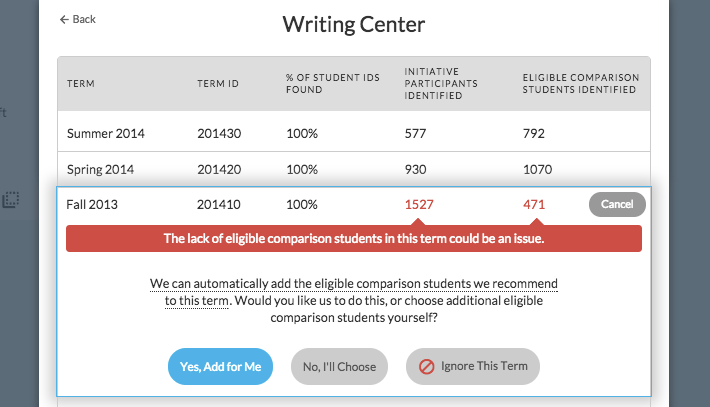
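To anticipate which terms might trigger this warning, you can apply the same 80% rule per term to your own list before uploading. The sketch below counts submitted rows, not the students actually identified in the Civitas data set, so treat it as an estimate. It applies only when your file lists eligible comparison students explicitly. The function name is hypothetical.

```python
from collections import Counter

def terms_short_on_comparison(rows):
    """rows: iterable of (student_id, term, is_participant) with 0/1 flags."""
    participants, comparison = Counter(), Counter()
    for _student_id, term, is_participant in rows:
        if int(is_participant) == 1:
            participants[term] += 1
        else:
            comparison[term] += 1
    # A term is flagged when comparison students are under 80% of participants.
    return sorted(
        term for term in participants
        if comparison[term] * 100 < 80 * participants[term]
    )

# Hypothetical list: the first term has 10 participants but only 7 comparison students.
rows = (
    [(f"1{i:04d}", "202110", 1) for i in range(10)]
    + [(f"2{i:04d}", "202110", 0) for i in range(7)]
    + [(f"3{i:04d}", "202130", 1) for i in range(10)]
    + [(f"4{i:04d}", "202130", 0) for i in range(8)]
)
print(terms_short_on_comparison(rows))
```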

Click ‘Let’s Fix This’ to the right of the term details to add eligible comparison students.

You will see a message introducing the options for fixing the term:

We can automatically add the eligible comparison students we recommend to this term. Would you like us to do this, or choose additional eligible comparison students yourself?

Select from these three options:

- Yes, add for me: Initiative Analysis will automatically add students who met the eligibility criteria during other same-season terms. For example, if Fall 2014 triggered this warning, eligible comparison students will be added from other Fall terms when the initiative was offered. Same-season terms are used to limit confounding factors that could influence analysis, as Fall terms look more similar to other Fall terms than other season terms across institutional operations, student populations, and student outcomes.

- No, I’ll choose: You will add other terms to include in the eligible comparison group. If you specified the eligible comparison group in your student list file, only the terms included in that file will appear as options. If you did not specify the eligible comparison group, all terms within the last four years that are available in your institution’s Civitas data set will appear as options. Click the checkbox to the left of any available term you want to include in the eligible comparison group and finalize your choices by clicking the Add students button.

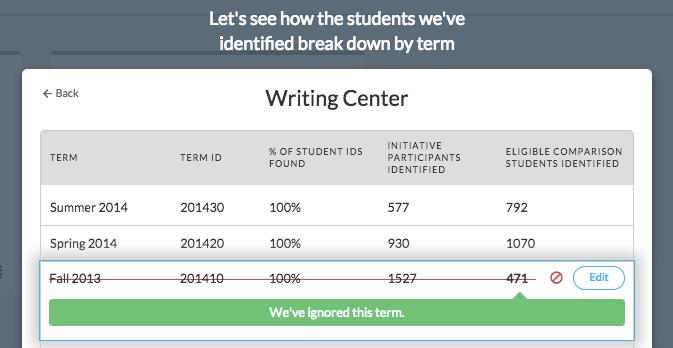

- Ignore this term: This term will be excluded from impact analysis. Excluding a term will result in all student records associated with that term being left out of the analysis. If there are many terms of data and a large population of students available for comparison, this may be fine. If there are very few terms and a small set of students, excluding a term could skew impact analysis results.

If you attempt to ignore all available terms, you will not be able to continue with the analysis. You will be prompted to edit your selections or upload a new list before continuing.
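The same-season idea behind the ‘Yes, add for me’ option can be illustrated with a small sketch. It assumes term labels of the form "Season Year" (such as "Fall 2014") and uses a hypothetical function name; it does not describe how Initiative Analysis selects terms internally.

```python
def same_season_terms(flagged_term, offered_terms):
    """Return the other offered terms that share the flagged term's season."""
    season = flagged_term.split()[0]  # "Fall 2014" -> "Fall"
    return [
        term for term in offered_terms
        if term != flagged_term and term.split()[0] == season
    ]

offered = ["Fall 2013", "Spring 2014", "Fall 2014", "Spring 2015", "Fall 2015"]
print(same_season_terms("Fall 2014", offered))
```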

You can continue the analysis without fixing the indicated terms. However, this is not recommended as the number of students analyzed for the indicated term(s) will be greatly reduced, which could cause inaccurate analysis.

When you have reviewed the student counts for each term the initiative was offered, click Continue to finish data validation.

Considerations when comparing students across terms

If persistence rates differ significantly across the terms included in the analysis, confounding factors may make the impact analysis inaccurate.

In general, more confounding factors are expected when initiative participants and comparison students are matched across different terms (that is, pre-post matching). Initiative Analysis considers student data that may not account for other factors that could influence student success in different time periods, such as change in global economic factors, effects from different interventions, or policy or programmatic changes at the institution.

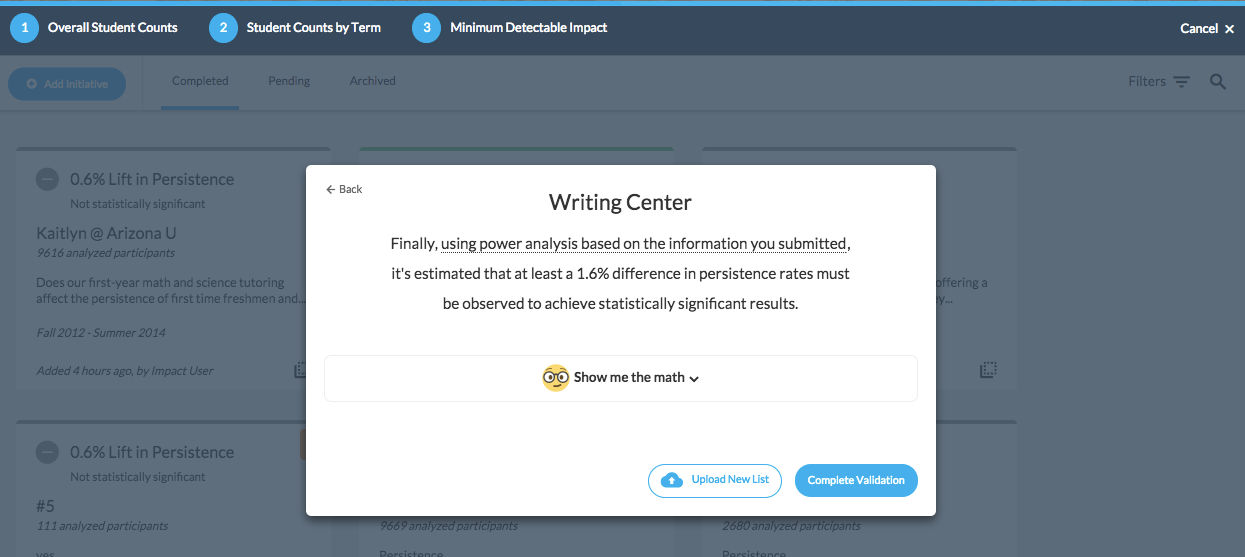

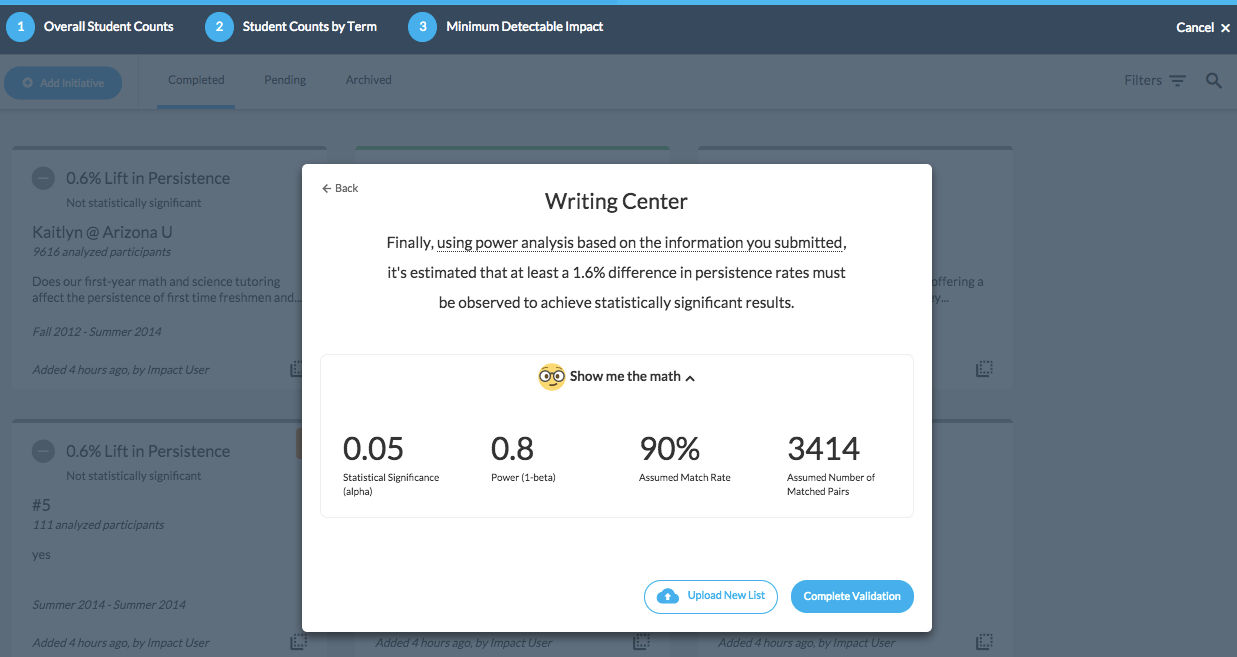

Check power analysis calculation

Before submitting the initiative for analysis, check the estimated minimum detectable impact or effect size that must be observed to see statistically significant results from this initiative.

The minimum detectable impact required for each initiative is determined using power analysis and a few assumptions on the expected match rate. Power analysis is used to estimate the sample size required to reasonably detect the effect of your initiative.

The match rate refers to the assumed percentage of initiative participants that will be matched with a comparable student through PPSM, using the data you submitted. A 90% match rate is assumed for this power analysis calculation. The number of matched pairs for each initiative determines the sample size. As the sample size increases, the minimum detectable impact decreases. A general rule for impact analysis is to provide as large a student population as possible to see a reasonable difference in persistence rates.

Tip: A reasonable change is typically a difference of 3.0% or less in persistence rates. Observing a difference greater than 3.0% is unlikely.

Click ‘Show me the math’ to see details about the assumptions made to perform this calculation:

- Statistical Significance (alpha): The significance level for the analysis.

- This remains constant at 0.05.

- Power (1-beta): The ability of the analysis to detect the effect of the initiative.

- This remains constant at 0.8.

- Assumed Match Rate: The estimated percentage of students successfully matched through PPSM (actual matching does not occur until you complete validation).

- This remains constant at 90%.

- Assumed Number of Matched Pairs: The estimated number of participant-comparison pairs, which is the estimated sample size.

- This should be equal to the match rate (90%) multiplied by the total number of identified initiative participants.
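The assumptions listed above can be combined in a short calculation. The matched-pairs step follows the text directly (rounding down is an assumption). The minimum detectable difference uses a common textbook approximation for comparing two proportions, which may differ from the calculation Initiative Analysis actually performs. The baseline persistence rate of 0.8 is a hypothetical input chosen only for illustration.

```python
from math import sqrt
from statistics import NormalDist

ALPHA = 0.05              # statistical significance, constant per the list above
POWER = 0.8               # power (1 - beta), constant per the list above
MATCH_RATE_PERCENT = 90   # assumed match rate, constant per the list above

def assumed_matched_pairs(participants):
    """Match rate times identified participants (rounded down: an assumption)."""
    return participants * MATCH_RATE_PERCENT // 100

def minimum_detectable_difference(pairs, baseline_rate):
    """Textbook two-proportion approximation, not confirmed as the product's formula."""
    z_alpha = NormalDist().inv_cdf(1 - ALPHA / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(POWER)
    return (z_alpha + z_power) * sqrt(2 * baseline_rate * (1 - baseline_rate) / pairs)

pairs = assumed_matched_pairs(1000)  # 900 assumed matched pairs
for n in (pairs, 4 * pairs):
    print(n, round(minimum_detectable_difference(n, baseline_rate=0.8), 4))
```

Under this approximation, quadrupling the number of matched pairs halves the minimum detectable difference, which is why a larger student list makes smaller effects detectable.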

Click ‘Complete Validation’ when you have reviewed and validated your submitted data for analysis. Initiative Analysis will immediately begin matching participant-comparison pairs through PPSM. This process can take up to a day to complete. The initiative will appear in the Pending tab of the home page until the analysis is complete.